Last week our new digital service GOV.UK moved out of public beta and replaced the two main government websites, Directgov and Business Link.

As my colleague Mike Bracken explained at the time, GOV.UK is focussed on the needs of users, making it simpler, clearer and faster to find government services and information.

Today we are releasing the beta version of our performance platform, which is how we will use data to measure whether GOV.UK really is simpler, clearer and faster for users.

Knowing that there were 1.1 million visits to GOV.UK on 17th October, each one lasting on average 2 minutes 46 seconds, is interesting in itself, but that isn’t enough to tell us whether this is a good level of performance.

More importantly, if there are areas where we can improve, what should our product team do to make it better?

The GOV.UK performance dashboard

Building on the prototyping work we did in the summer, we are starting with one dashboard containing five modules of information.

Each module has a clear purpose: a decision or an action that we can take depending on what is shown.

Our weekly visits and weekly unique visitors modules are simple but important. They allow us to see very clearly if those people who used Directgov and Business Link are now using GOV.UK.

If the weekly GOV.UK visits and unique visitors end up being too low then we need to understand where people are getting lost and improve our redirects and search engine optimisation.

Right now our focus is on the transition to GOV.UK and listening to feedback, but very soon we will begin to use that qualitative and quantitative data to look in detail at how to keep improving GOV.UK, how to make it even simpler, clearer and faster.

Defining success

The ‘Format usage last week’ module is one of the ways our product teams will be able to do this.

'Formats' are how we refer to the templates that each page of GOV.UK uses, and we have four main formats in use on the site right now.

Our product managers and analytics team have worked together to define success criteria for each format by looking at existing data and feedback from user research to estimate what behaviour contributes towards a successful visit.

Sometimes it’s as simple as the time it takes to read a few sentences of text, while for other formats we need to check additional criteria such as which navigational links are being used.

The initial criteria are shown below but a lot more work is required to validate these and we will also be looking at other forms of success data such as the level of trust or satisfaction that a user feels about their visit.

Guide format

e.g. Get a passport for your child

Success = user spends at least 7 seconds on the page, or clicks on one of the left hand section headings, or a link within the body of the page.

Benefit format

e.g. Jobseeker's allowance

Success = user spends at least 7 seconds on the page, or clicks on one of the left hand section headings, or a link within the body of the page.

Quick answer format

e.g. UK bank holidays

Success = user spends at least 5 seconds on the page.

Smart answer format

e.g. Maternity pay entitlement

Success = user clicks on 'Start now', answers all of the questions and then arrives at the final result page.
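The initial criteria above are simple enough to express as a single predicate. The sketch below is a minimal illustration, not how GOV.UK actually records visits: the `Visit` record, its field names and the format keys are hypothetical, and it assumes the relevant signals (time on page, which links were clicked, how far a Smart Answer journey got) are already available for each visit.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    """Hypothetical record of the signals the success criteria look at."""
    page_format: str                        # "guide", "benefit", "quick_answer" or "smart_answer"
    seconds_on_page: float = 0.0
    clicked_section_heading: bool = False   # one of the left hand section headings
    clicked_body_link: bool = False         # a link within the body of the page
    clicked_start_now: bool = False         # Smart Answers only
    answered_all_questions: bool = False    # Smart Answers only
    reached_result_page: bool = False       # Smart Answers only


def is_success(visit: Visit) -> bool:
    """Apply the initial per-format success criteria to one visit."""
    if visit.page_format in ("guide", "benefit"):
        # At least 7 seconds on the page, or a section heading or body link clicked.
        return (
            visit.seconds_on_page >= 7
            or visit.clicked_section_heading
            or visit.clicked_body_link
        )
    if visit.page_format == "quick_answer":
        # At least 5 seconds on the page.
        return visit.seconds_on_page >= 5
    if visit.page_format == "smart_answer":
        # The whole journey: 'Start now', every question, then the result page.
        return (
            visit.clicked_start_now
            and visit.answered_all_questions
            and visit.reached_result_page
        )
    raise ValueError(f"no success criteria defined for {visit.page_format!r}")
```

For example, `is_success(Visit("guide", seconds_on_page=3, clicked_body_link=True))` is true because a body link was clicked, while a Smart Answer visit that only clicks 'Start now' is not yet a success.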

Each time a user visits a page on GOV.UK we record this as an ‘entry’. If the success criteria for that format are met then we also record a ‘success’.

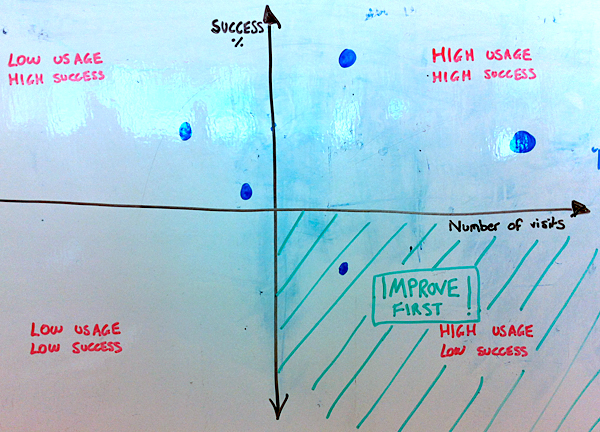

By adding up the ‘entry’ and ‘success’ counts within each format, we calculate the percentage of visits that were deemed successful and plot these points on our chart.
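The entry and success tally can be sketched in a few lines. This is a hypothetical illustration rather than the platform's actual code; it assumes each visit has already been labelled with its format and with whether that format's success criteria were met.

```python
from collections import Counter


def success_rates(visits):
    """Return {format: percentage of entries that were also successes}.

    `visits` is an iterable of (page_format, succeeded) pairs, one per entry.
    """
    entries = Counter()
    successes = Counter()
    for page_format, succeeded in visits:
        entries[page_format] += 1            # every visit counts as an 'entry'
        if succeeded:
            successes[page_format] += 1      # criteria met, so also a 'success'
    return {fmt: 100.0 * successes[fmt] / entries[fmt] for fmt in entries}
```

With a made-up sample such as `[("guide", True), ("guide", True), ("guide", False), ("smart_answer", True), ("smart_answer", False)]`, this gives roughly 66.7% for guides and 50% for Smart Answers.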

We then use this to prioritise which format we should be examining in more detail to understand how it can be improved.
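One simple way to turn those percentages into a priority order is to sort formats from least to most successful. The sketch is an assumption about how such a ranking could work, not a description of the team's process; the optional `threshold` cut-off is hypothetical.

```python
def formats_to_examine(rates, threshold=None):
    """Order formats from least to most successful.

    `rates` maps format name -> success percentage. If `threshold` is given,
    only formats below it are returned (a hypothetical cut-off).
    """
    ordered = sorted(rates.items(), key=lambda item: item[1])  # stable for ties
    if threshold is not None:
        ordered = [(fmt, rate) for fmt, rate in ordered if rate < threshold]
    return [fmt for fmt, _ in ordered]
```

Fed the rough first-week figures reported below (around 80% for Guides, Benefits and Quick Answers and around 50% for Smart Answers), Smart Answers come out first, and with `threshold=75` they are the only format returned.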

How smart is a smart answer?

Our first set of weekly data shows that around 80% of visits to Guides, Benefits and Quick Answers result in a successful visit. Smart Answers appear less successful, with only 50% of visits resulting in a completed journey.

So, what does this mean and what are we doing about it?

The criteria used to judge a successful visit could be wrong, and Smart Answers in particular may have more demanding success criteria than other formats. A 'conversion rate' of 50% is lower than for other GOV.UK formats, but conversion rates of 50% for many private sector transactions would be seen as highly successful.

From the four rounds of user testing we did before launch, we are confident that Smart Answers are a more effective format for helping users to find the answer to complex queries. Directgov previously relied on a large quantity of text, often across multiple pages, which users struggled to navigate successfully.

But we will be looking at the data for each individual Smart Answer page to see if there are specific improvements that can be made to the layout, navigation or content.
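Because a Smart Answer only counts as a success once the user has clicked 'Start now', answered every question and reached the result page, looking at each step separately shows where users drop out. The sketch below is a hypothetical funnel calculation; the step names and the counts in the usage example are invented for illustration, not GOV.UK data.

```python
def funnel(step_counts):
    """Return per-step and overall conversion for an ordered journey.

    `step_counts` is a list of (step_name, visits_reaching_step), starting
    with the entry step. Each step's rate is relative to the previous step;
    the overall rate is the last step relative to the first.
    """
    steps = []
    for (_, prev), (name, count) in zip(step_counts, step_counts[1:]):
        steps.append((name, 100.0 * count / prev))
    overall = 100.0 * step_counts[-1][1] / step_counts[0][1]
    return steps, overall
```

With made-up counts `[("entry", 1000), ("start_now", 800), ("all_questions", 600), ("result", 500)]`, the overall rate is 50%, and the step rates (80%, 75%, about 83%) point to the questions themselves as the place to look first.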

The success rates for all the formats will inevitably go up and down in the coming weeks. The most important feature for us is that we have a specific and transparent measure of success, which gives us a way of assessing the improvements we will make to the site.

Whatever happens, we'll be blogging about it in a couple of weeks to let you know what we find out.

What next?

We are releasing the performance platform as a public beta because we want to share with everyone our approach to measuring performance.

Our criteria for determining success will need to be refined as we learn more about how GOV.UK is being used and we will then be able to use this method to measure how sections of content are performing on the site.

We already have another thirty modules on our to-do list and it’s not all about web analytics for GOV.UK - we will look at other data sources and create dashboards for other products and services that benefit from this approach.

However, the purpose of our performance platform is clear - our teams need to make decisions and improve products by using data that tells them which things to examine first and how.

16 comments

Comment by Sebastian Danielsson posted on

Are you not defining success for the different formats anymore? I can't find the dashboards on /performance.

Comment by Davie Taylor posted on

Good question. Over the last year or so our focus has been on transitioning the 300+ government websites onto GOV.UK and understanding the impact of this change through analytics. Now that we've completed transition, we're actively working on the ways we use data and analytics to help us iterate and improve the service. Defining and understanding the success measures of different formats will likely be revisited as part of this process, so watch this space.

Comment by André posted on

Hi, do you have statistics about how users perceive the navigation of the site? For example, XX% find navigating the site easy. I know it's not a good metric, but the sites I would like to compare with have neither the engagement nor the task completion rate numbers for comparison. The perception of the overall user experience would be great to look at as well.

Comment by James Thornett posted on

Hi Andre,

Thank you for your question. We are going to put a feedback survey live on GOV.UK within the next week to ask questions on subjects like how easy the site is to use. We will then be able to compare this information with the data we get from our analytics and will keep publishing our latest performance measures on this blog and on the Performance Platform.

James

Comment by baragouiner posted on

Interested in "Quick answer format e.g. UK bank holidays. Success = user spends at least 5 seconds on the page."

This means that a user who takes 10 minutes to get a quick answer to their question would be counted as a success.

Wouldn't a maximum time spent on the page be more appropriate? For example: success = user spends no more than 8 seconds on the page.

Comment by alexcoley posted on

James, how come you are using page grips and secondary clicks as KPIs when Tom Loosemore made such a big point out of completion rates? http://www.whatdotheyknow.com/request/performance_metrics_for_jobseeke#incoming-145781

Comment by James Thornett posted on

Hi Alex - Our attempt to define success measures for all types of pages on GOV.UK is absolutely aligned with the idea of a completion or conversion rate. Some pages align very closely with this and we are tracking the number of people that make it from the 'start' to the 'end' of a piece of content where user interaction is required.

For other page types such as those in the quick answer format (e.g. https://www.gov.uk/bank-holidays) we are defining criteria which give us the best indication of the 'rate of completion' for users arriving at this page. In this instance the key metric is how long it will take the average user to read the information on the page.

James

Comment by alexcoley posted on

Thanks for your follow up James. Does this mean that you will be measuring (and most importantly, publishing) 'rate of completion' for the Jobseekers Allowance Online Application?

Comment by James Thornett posted on

Hi,

The Jobseekers Allowance Online Application is currently owned and managed by the Department for Work and Pensions so we are unable to use GOV.UK data to measure the rate of completion for this transaction. However, we are working with all government departments to help them get better analytics data for their transactional services.

Comment by Charlie Hull (@FlaxSearch) posted on

It'll also be interesting to see how users carry out searches - for example which result they click after a search (if any) and what they do when they get there - this should give you some idea of whether the user thinks the search actually answered their question. If you can start logging that kind of information there's the possibility to feed this data back into the search rankings to improve relevance. It's also worth logging spelling suggestions to spot common errors, which again can be fed back.

Comment by James Thornett posted on

Hi Charlie - absolutely, search is a very interesting source of data which can help us understand how people are looking for content on GOV.UK and potentially improve the actual search experience.

At the moment this is not available on the performance platform (although we are looking at the raw data internally) but it's great to hear feedback on what data people would like to see made available.

Comment by Andy Paddock posted on

Talking of search, is it possible to record second or third searches? They would show what people are searching for when they don't get a result they want to click on.

Comment by James Thornett posted on

Hi Andy - We hope to add the Search Results page format to our 'format success' module fairly soon and we are looking at all kinds of data to try and define the criteria which will determine whether a search experience is judged to be a success or not, each time a new search is carried out.

James

Comment by Andy Paddock posted on

I take it from the dashboard that you are using Google Analytics. Are you able to get all the stats required by the Cabinet Office?

If you've had to add some bells and whistles would those extras be available to other government departments?

Comment by James Thornett posted on

Hi Andy - yes, the source of web usage data for GOV.UK is currently Google Analytics.

We've not added any special bells and whistles, but one reason for creating a performance platform like this is so that we are able to collect data from more than one source and combine it into useful graphs and visualisations... more on this soon I hope!

Comment by Andy Paddock posted on

I take it from the dashboard that you are using Google Analytics. Are you able to report all the things the Cabinet Office say you should?

If you've had to add some bells and whistles would that work be available to other government departments?