'I love this site! …This is perhaps the finest example of a government website in the history of the Internet.'

Member of the public

'What idiot thought a single web site was a good idea? The separate ones are bad enough.'

Civil servant

These are genuine comments at the extreme ends of the feedback we received for the Inside government beta. Over the six weeks of the beta we received a lot more in between, and we were grateful for every last item of praise and criticism.

This post is about how we captured that feedback, what we learned from it and what we are going to do as a result.

Recap

We released Inside government as a beta on February 28th 2012, following 24 weeks of iterative development. The idea was to test - on a limited scale - the site that may come to accommodate all departmental corporate websites. The beta ran for six weeks in the public domain and involved 10 pilot departments (BIS, Cabinet Office, DCLG, Defra, DFID, DH, FCO, HMRC, MOD and MOJ).

By releasing Inside government we were testing a proposition (‘all of what government is doing and why in one place’), and two supporting products (a frontend website and a content management system). With this in mind, we wanted to ensure that we captured feedback from the public and from colleagues across government.

We wanted to know if - having used the site - people thought it was a good idea and whether it should be developed further. And besides testing the viability, we hoped that feedback would prove a rich source of ideas and steering on what was important for us to concentrate on in subsequent iterations.

Open feedback channels

The most obvious and noisy sources of feedback were, of course, the ‘open channels’: Twitter, email, our service desk, our GetSatisfaction forum and, for the people who had our numbers, the phone.

The value of these routes lies in their diversity and in the opportunity they gave the project team to engage directly with end users. They were particularly popular with public users, but we were pleased to see that civil servants also took up these opportunities to put forward their views and get into discussion with others.

Here's a cross-sample of the comments that came in:

'Love the product! The concept of GOV.UK is the right way to go.'

Member of the public

'The design feels far more people-friendly, the language (of the site architecture as well as the content) feels like it has the right balance between being friendly and expert'

Member of public

'I have looked through the test website and I think it is an excellent idea. I like the news reports and I like the idea of one website for all departments.'

Civil servant

'I think the concept of gov.uk is sensible but there's a way to go yet to get this website working well.'

Civil servant

'Looks very basic and home made'

Civil servant

'I don't know why you'd need a new website. Why not just add a section on how government works to direct.gov (sic)'

Civil servant

Our open channels were a particularly rich source of product enhancement ideas. Examples included: adding a section on the mechanics of how government works, incorporating section-specific searches, and suggestions of what data to include in feeds.

While there was lots coming through the open channels, much of it was granular and from an engaged audience predisposed to take an interest and have an opinion. Highly valued stuff but only part of the picture, and so our evaluation squeezed three further tests into the time available.

User interviews

Inside government should be open and accessible to everyone, but we expect the core users to be people with a professional or deep thematic interest in the policies and workings of government.

To get qualitative insights into how these users used and rated gov.uk/government, we arranged 12 face-to-face interviews (with professionals from academia, charities, media and the private sector). Each participant knew that they would be asked about their internet usage but they were not aware which site(s) they would be discussing.

Interviews were conducted on a one-to-one basis by a trained facilitator and began with a discussion of how the individual used central government's current websites. It was evident that although a user gets to know their way around a particular section of a site, when they move off that section or onto another department's site the inconsistencies present real frustrations.

'It is tricky because currently you have to go to each department individually and it's only done with civil servants in mind.'

Member of public

The facilitator then pulled up Inside government. The participants began with a cursory browse and were then set tasks designed to move them through the site's content and functionality. As they carried out the tasks, the facilitator asked them to comment on how well they felt the site was performing.

We learned that these professionals found the user interface clear and intuitive. There were common criticisms, a number of which were arguably down to the 'rough' nature of the beta but are important to heed as the site develops and takes on more content. These included issues with long lists, the visibility of 'related content' in columns, and the depth of content (some participants worried that it was being ‘dumbed down’). Perhaps the most interesting finding was that participants wanted more of a departmental lead in the navigation, rather than the thematic approach that we were trialling.

Fundamentally, when asked, the participants were able to articulate who is responsible for the site, what its purpose is and who the users are likely to be. In this sense, the proposition of Inside government was clear and, they said, with some development this was a product that they welcomed because it would make their work easier.

- Download the Inside government qualitative testing (PDF, 108kb)

Usability testing

With insights from the core users bagged, we were hankering after feedback from general public users (who we expect to be infrequent visitors to corporate sections of government websites and who have basic or no prior knowledge of the machinery of government). What would they think of the proposition and would they be able to find what they wanted easily?

Using GDS's summative test methodology, we put Inside government under the scrutiny of a panel of 383 users. The respondents were a mix of ages and genders and were spread geographically throughout the UK. They took part in their home environment, with no moderator or facilitator.

The tests use a range of measures to assess performance (such as journey mapping and completion times). Participants were prompted (by software) to find specific information (facts and figures) on the site through five tasks (which were tracked) and at the end of the tasks they were asked a series of questions about their experience.

We were pleased to see that on all but one of the tasks the successful completion rate was above 60%, and more than 50% of participants said they found it very or quite easy to complete the tasks. That was a positive overall trend on an unfamiliar and content-heavy site.
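For anyone wanting to run the same kind of check on their own tracked sessions, here is a minimal sketch of how per-task completion rates might be worked out. It is not the tooling we used: the record layout, the function names and the sample rows are all invented for illustration, and the 60% threshold simply mirrors the figure quoted above.

```python
from collections import defaultdict

# Hypothetical tracked results: (participant_id, task_number, completed).
# These rows are made up for illustration; they are not the beta's data.
results = [
    (1, 1, True), (2, 1, True), (3, 1, False),
    (1, 2, True), (2, 2, False), (3, 2, False),
]


def completion_rates(rows):
    """Return {task_number: share of attempts that were completed}."""
    attempts = defaultdict(int)
    successes = defaultdict(int)
    for _participant, task, completed in rows:
        attempts[task] += 1
        if completed:
            successes[task] += 1
    return {task: successes[task] / attempts[task] for task in attempts}


def tasks_not_above(rates, threshold=0.6):
    """List the tasks whose completion rate is not above the threshold."""
    return sorted(task for task, rate in rates.items() if rate <= threshold)


rates = completion_rates(results)
flagged = tasks_not_above(rates)
print(rates)
print(flagged)
```

Keeping the rate calculation separate from the threshold check means the same numbers can be reused as a benchmark when a later release is tested.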

Below the overall trend, a few issues of concern were flagged up. Just over a third of participants found it difficult to complete the tasks, especially when it came to finding a specific piece of information on a page. Most participants described the content as 'straightforward', 'to the point' and 'up to date', while some said it was 'longwinded' and 'complicated'. Over 50% of participants said they thought Inside government contained 'the right amount of information' but 39% thought there was too much.

From these findings, we were satisfied that in the beta we had built a site usable by the general public, but there is clearly a great deal of work still to be done to produce an excellent ‘product for all’.

- Download the Inside government summative test results (PDF, 2mb)

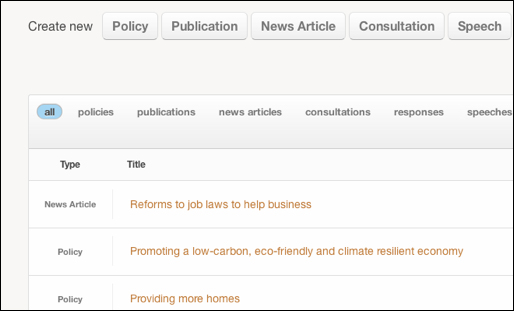

Comparative CMS Tests

We wanted to tackle one of the common complaints government digital teams have about their digital operations: cumbersome, convoluted and costly content management systems. So we decided to try building a CMS from scratch that would be stable, cheap to run, include only the functionality required to manage the Inside government site, and be easy to use by even the most inexperienced government staff.

To test what we produced, we ran structured testing in departments, using their standard IT and setting the Gov.uk publishing app head-to-head against their existing CMS in a series of tasks. The aim was to assess the performance and usability of the beta publishing application, and to understand where government publishers found it better than, worse than or equivalent to what they were currently using. We also wanted to get their ideas for further iteration.

We ran tests in six departments against five incumbent products with seven participants, who were from digital publishing teams. The participants were a mix of those with previous or no experience of using the Gov.uk publishing app, and each was given up to five tasks representing common 'everyday' CMS functions.

In all but one task, users completed tasks faster or in comparable times. The slower task was down to the users' lack of experience with the Markdown formatting method (and even then it was only in one specific area - bulleted lists).
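As a rough illustration of what 'faster or in comparable times' can mean in practice, the sketch below classifies a head-to-head timing for a single task. It is an assumption-laden example rather than the method used in the tests: the 10% tolerance, the task names and the timings are all hypothetical.

```python
def compare_times(new_seconds, old_seconds, tolerance=0.1):
    """Classify the new system as 'faster', 'comparable' or 'slower'.

    Times within `tolerance` (a fraction of the old time) count as
    comparable. The 10% default is an assumption for this sketch.
    """
    if old_seconds <= 0:
        raise ValueError("old_seconds must be positive")
    difference = (new_seconds - old_seconds) / old_seconds
    if difference < -tolerance:
        return "faster"
    if difference > tolerance:
        return "slower"
    return "comparable"


# Hypothetical timings in seconds: (new publishing app, existing CMS).
timings = {
    "publish a news item": (90, 150),
    "format a bulleted list": (120, 80),
    "upload an attachment": (60, 62),
}

verdicts = {task: compare_times(new, old) for task, (new, old) in timings.items()}
for task, verdict in verdicts.items():
    print(f"{task}: {verdict}")
```

Agreeing the tolerance before the tests are run avoids any argument afterwards about what counts as 'comparable'.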

Taking into consideration all the tasks they were asked to complete during the tests, the users made positive comments about their overall experience of the Gov.uk publishing app. For most it was easier to use, better laid out and faster than their current content management system because it was customised to their specific professional needs.

'The CMS is a dream - especially compared with the current [product name removed] system. It's fast, user friendly and intuitive. It's also easier to use visually.'

Civil servant

- Download Inside government CMS comparative testing (PDF, 72kb)

What we learned

We didn’t get to do as much testing as we would have liked. Time was against us. But from what we did do, we learned a lot.

Problems include:

- Big improvements in findability need to be made if Inside government is to be able to cope with the weight of content from all departments and agencies

- We need to adjust to make departments more prominent in the navigation and on pages

- In trying to improve the readability and comprehension of the corporate content, Inside government needs to be careful not to over-simplify

Noted.

Overall, we tested well and the positives rang out louder:

- People understood the concept and valued the proposition

- The site design was applauded

- The publishing app was lean and easily stood up to the pressures of real use

People were impressed by what GDS and the 10 participating departments had achieved and wanted to see more. No doubt, there is development to do but this thing can work.

Crucially, by conducting these tests we now have benchmarks against which to measure the performance of future releases of Inside government. This data was not previously available to the Inside government team in the way it was for those involved in the other betas to replace Directgov and Business Link.

We will plough the learning into forthcoming iterations and we will continue to run testing regularly and report back on the findings.

If you want to tell us about your experience of the Inside government beta, leave a comment or drop us an email - govuk-feedback@digital.cabinet-office.gov.uk.

Ross Ferguson is a Business Analyst for the Inside government project.

28 comments

Comment by Inside Government – traffic, demand and engagement numbers so far | Government Digital Service posted on

[...] figures are as the departments expected and in line with what we predicted. Following on from the Inside Government beta, we projected that Inside Government – with just the ministerial departments on board – [...]

Comment by A quick tour of Inside Government | Government Digital Service posted on

[...] Feedback isn’t just for Cobain and Hendrix – what we heard from the Inside Government beta [...]

Comment by What we know about the users of Inside Government | Government Digital Service posted on

[...] Our most recent user testing last week involved one-to-one qualitative testing with 12 people who were all regular or frequent users of the DCLG and DFT websites. We asked them to tell us about their use of current government sites and then asked them to try meeting their needs on Inside Government and to compare it to what they were used to (just as we did back in our beta days). [...]

Comment by From Whitehall to Kingsway… my big move forward and back « BASIC CRAFT posted on

[...] I’m involved in at GDS. You can also read a recent post I published on the GDS blog about the evaluation of the Inside government beta. It’s part of a triptych that starts with Neil Williams on why we did [...]

Comment by Fiona G posted on

Hi Ross,

Hope you are well in GDS-land. I was surprised to see nothing mentioned about the search function, which I felt was the weakest aspect of the beta. Did you not get feedback on this? Are there plans to improve it - as you know I passed you examples of some poor results. I think a really good search tool is vital for this site...

Comment by Ross Ferguson posted on

Hi Fiona,

Agreed, search is crucial on a site so heavy in content.

Making search work for Inside government and across the entirety of Gov.uk was something we spent a good amount of time thinking about for the beta. But because of the very limited content we were working with, we only put in as much effort as was required to deliver the minimum viable product for the beta period. This meant that the search returned results from Inside government but directed you to search again on other areas of Gov.uk if you didn't find what you were looking for.

Feedback we received was limited. We saw in the structured tests that a minority of users defaulted to using search to navigate the site. We also saw that some users expected the search to be specific to the section they were in at the time (as opposed to serving up results from the entire site). So limited but valuable insight.

Now that development has kicked off again - for real this time - we will be giving search a lot of love.

Ross

Comment by Graham posted on

The flaws in the testing speak for themselves.

On the second point, are there really people who need to know that the Right Honourable Andrew Lansley CBE MP is the minister responsible for updated guidance on the diagnosis and reporting of Clostridium Difficile?

Comment by Graham posted on

I wonder if the results of the user-testing exercise would have been so good if they hadn't asked leading questions, or given big clues in the way they were worded?

For example:

"Please find the latest government policy on Reforming higher education and student finance in England."

Not surprisingly, people looked in 'policy', the sixth word in the question, and then searched for the policy with that title.

"Can you now find the consultation for the MOD's strategic equality objectives for 2011-14 and indicate when it is closed"

Again, the clue is in the question - in the sixth word, so users looked in the section with that name, and presumably the consultation had the same title.

The only one they appeared to really struggle with was the speech, perhaps because that wasn't advertised in the primary navigation, and there was a high proportion of searches for task 3 because it used the word 'guidance' rather than 'publications'.

The research may have also benefitted from testing real user needs - eg I can't imagine many people are interested in the 'diagnosis and reporting of Clostridium difficile' or the 'MOD's strategic equality objectives for 2011-14'.

Comment by Ross Ferguson posted on

Thanks for reviewing the report and for the comment, Graham.

I obviously disagree with your assessment that the questions were leading or that we weren't testing against genuine needs.

On the first point, the questions were designed by an experienced team independently of the product development team. I don't think there were unfair 'clues' in the wording because we had section titles such as 'consultations' and 'news'. If we had said 'look in the top nav and click on policy, then go to policy x and scroll down to the second section and in there find fact Y', I think that would qualify as a 'big clue'.

On the second point, the purpose of Inside government is to serve up detailed corporate information on often complex issues and policies. The regular users are likely to have a professional interest but there will also be occasional interest in specific areas from a 'general' public. So I am happy that for the beta, these were legitimate tasks to set; more so because we were dealing with a limited set of content and ultimately we were testing the IA and interface more than anything else in these tests.

Besides the summative testing that you pick up on here, we had two further structured tests as well as the open feedback. Taking all these into consideration is what led to our appraisal that the beta had tested well.

As I say in the post, our testing is going to get more frequent and more sophisticated as the site gets bigger, greater volumes of real content get uploaded and real users begin to use it in real situations. We'll keep releasing the results and the methodologies we employ as part of that testing, so do keep an eye on us to see if we are improving.

Comment by James Geddes posted on

(I am a civil servant). Good luck -- this stuff is not easy. FWIW, I love Markdown -- and there are a number of civil servants using a similar system in my department without any problems. (I suppose you'd have to ask them their preferences vs. Word.) Just for amusement, maybe I can weigh in with some observations on IT -- I care a lot about IT, which you can take as either a reason to believe or to not believe that I have anything relevant to say.

(1) There are some people who write code and some people who write English: those few who do both have created, and use, non-WYSIWYG, text-based tools (like Markdown). That has to be a valuable data point.

(2) There are enormously powerful, yet logically coherent, open-source version-control and collaboration tools out there which work with text-based documents (git, mercurial, etc). We use none of them. Instead, we email Word documents round and round and argue about who has the master copy. I can't talk about this without beating my head against the table, so I'd better stop.

(3) Can software be both easy-to-use and powerful? Maybe, maybe not. What I think is true is that we're a long way from using computers in a way that maximises how productive we are, and that whatever changes are required to make that happen are going to involve us learning new skills and new ways of thinking. But you know what? We're pretty smart. I mean, right? It's our job to understand stuff. All of us are capable of adapting -- the question is, will we have the tools to adapt to?

James

Comment by Ross Ferguson posted on

Cheers James - these are good points well made and some searching questions.

Comment by Thinking about the Inside Government alpha feedback | Digital by Default posted on

[...] is a lot to love in the latest bout of extreme transparency blogging from the GOVUK team. Sharing the results of the feedback and user testing for the Inside Government [...]

Comment by baragouiner posted on

"the site that may come to accomodate all departmental corporate websites"

may? or will?

Comment by Ross Ferguson posted on

Will, in the sense that the platform will house them. May, in the sense that the model we trialled in the beta is likely to change and that change may be radical.

Comment by baragouiner posted on

Thanks Ross - good to meet you yesterday!

Comment by David Durant (@cholten99) posted on

I continue to be impressed with the thoroughness of GDS's testing methodology. I do wonder if the civil servants providing the positive feedback are being encouraged to become in-departmental evangelists for the new way of working.

I agree with your comment that WYSIWYG editors tend to make people think about layout vs content (the old LaTeX vs Word argument) but currently one of the biggest hurdles GDS needs to overcome from civil servants is "oh no, not something else to learn" so perhaps some give might make sense on this one.

It's interesting to see R Walter bring up the subject of tagging pages. Has there been any serious discussion of taxonomy across GDS sites and using tags to help users find and understand the type of pages they are looking for?

Comment by Ross Ferguson posted on

David - thanks for sharing your comments and suggestions.

We are a small project team so we are definitely encouraging colleagues in departments to be advocates and promote awareness and discussion in departments.

The WYSIWYG vs markdown debate is one we need to test more thoroughly. Evidence is needed to solve this issue and of course it can evolve over time. We can be fleet of foot when it comes to change.

Taxonomy is another area for cross-proposition collaboration. It needs to work across the corporate offering of Inside government and the offerings of the mainstream services for citizens and businesses. We are studying who is doing this well at the moment and suggestions of who to look at would be appreciated.

Comment by juliac2 posted on

I too am pleased and hugely impressed by the thorough testing being carried out by the GDS team into this pilot project. However, can I flag up that this is not a completely new way of working? We in DFID have taken user testing seriously ever since we first launched a public website - it is feedback that has guided every iteration and redevelopment we have done - an example being clearly stated in this recent case study published by Fluent - an agency we worked with: http://fluent-interaction.co.uk/casestudy_dfid.html

Some of us are already evangelists for continuous improvement and listening to users - GDS didn't invent the concept!

Comment by R Walter posted on

I laughed when I read some of the feedback from the civil service users, and then found it rather depressing to see the same old attitude and 'departmental' thinking. Completely at odds with user-centred thinking. As a member of the public nothing interests me less than the latest name for BIS or some other department.

It was interesting though to see that some testers queried the navigation by theme rather than department. In my work, I've seen two reasons for this:

1. When the public wish to interface with a government service by phone or letter, they still need to know which department is responsible for what (unfortunately).

2. When removing an established and expected IA (ie navigation by department), you are asking your users to trust you: trust that you comprehensively cover everything they need and that they won't have to go on the department website for the fine detail. This takes time as well as a very thorough and comprehensive IA.

How about easing this by tagging pages with the appropriate department's name? It will allow the curious/frustrated user to get to know who's responsible for what.

Secondly, it would be interesting to ask users to run your tests again but without using gov.uk and instead relying on departmental websites and Google.

Comment by Ross Ferguson posted on

The 'department-anchor' insight was a great one.

There's certainly no suggestion of creating a mono-department site, so we need to amplify the presence of the departments more. We had tags on every page to tie them to one or more departments, but they were often overlooked. Changing the position of the 'department' tab in the nav is also something we need to test.

The thematic stuff still has a place and we want to tune that some more based on the demand expressed in some of the feedback and the examples of this working elsewhere that we have seen (Worldbank data site, UK Parliament and Pew Internet, for example).

Big yes to more testing - more often and more nuanced.

Comment by letsallplaygolf posted on

I think I've got to agree with the comments above, I'm not convinced markdown is the right choice for this website. What are the advantages to, say, ckeditor configured in the strictest possible way?

The 'critical' comments attributed to civil servants are disheartening to say the least. I won't be too harsh, and of course I know all feedback, good and bad, is valuable - but I feel these people have missed the point of this project.

Bravo to the gov.uk team. Stellar work.

Comment by Ross Ferguson posted on

Thanks for the views and encouragement.

Comment by lazyatom posted on

One reason to avoid using a typical WYSIWYG editor is that using one can encourage writers to think about presentation (i.e. "give this paragraph a larger font size" or "put that image exactly there"), rather than focussing on the content itself.

I'd agree that Markdown isn't ideal, but I think there's definite value in avoiding an interface which allows text to be formatted arbitrarily. I would not be at all surprised if the future evolution of the CMS moves towards something that feels more WYSIWYG, but is still storing the content in a formatting-independent way.

Comment by Jennifer Anne Isabel Poole posted on

Ross, this is a really interesting post and I love your 'users' pic as well - saw this when Macon Philips instagrammed it! Just one thing - I feel that by attributing all the stupid or unhelpful comments to civil servants you're tarring everyone with the same brush. Civil Servants are users too, and a lot of them don't even know the difference between the internet and intranet let alone have a wonkish level of insider info.

Comment by Ross Ferguson posted on

Hi Jenny

Thanks for the comments.

There were positive and negative comments from our Civil Service colleagues; examples of both are included in the post. No tarring or feathering intended. It's perhaps just that some of the civil servant comments were the best quotes for the purpose of bringing the evaluation to life.

I think it's right to attribute a comment from either a member of the public or Civil Service in this context because of the way that we split out the tests, and because most of the civil servants commented in their 'official capacity'.

We were very mindful right from the off to regard civil servants as users; users of both the frontend and the CMS. Indeed, some of the user stories we used in the development concentrated solely on the needs of civil servants.

Cheers

Ross

Comment by Jennifer Anne Isabel Poole posted on

Agree with fats, I'm not keen on markdown, it feels like when I had to learn to use MS-DOS retrospectively in my first job

Comment by Fats Brannigan posted on

Can it be true that you'll expect your CMS users to learn Markdown, rather than a simple WYSIWYG editor? That's just wilful nerdery for the sake of it.

Comment by Ross Ferguson posted on

Hi Fats

Thanks for the question.

It's not the case that we do things just for the sake of it, in fact that's the opposite of what we are about. But we are very sold on experimentation and that's why we wanted to trial markdown as a means of formatting while keeping the code clean and consistent.

In the main, it worked well with our sample of publishers. Most hadn't come across it previously but they quickly got used to it. The issue we had when it came to the tests was actually one of below par instructions. We had a guide in the editing screen but it wasn't clear enough. Better guides are needed but also more testing as we go through the next iteration.

Cheers

Ross