This post was contributed by Joanne Inskip, Senior Customer Insight Manager at the Government Digital Service:

At the start of 2011, the GDS Customer Insight team were given the task of developing a research methodology that could:

- Measure the performance of digital government services (specifically: task completion rates, time taken, drop-out points, user comprehension and satisfaction)

- Be used on the live services as well as those in development

- Blend behavioural data with perception data

- Be rolled out across government to provide consistent measures for digital transactions

As a result, a study called the Summative Test was born. The name is meant to signify finality: the test’s primary purpose is to measure a service’s performance, rather than to inform interaction design. It is run on live services or, for new services, at the end of the design iteration cycle but before the service is built.

How it works for live services

We automatically intercept a sample of users on the Directgov site and ask them to take part in a research study. The survey is triggered from the pages on Directgov that drive the most traffic to the transaction in question. An important element is that it is a ‘true intent’ study. This means that the user has come to the site with the purpose of completing (or trying to complete) the task that you want to measure. Consequently, users approach the task and the study with real life expectations, goals and needs.
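
As a rough illustration of the interception step, the decision to invite a visitor can be thought of as two checks: is this one of the pages that drives traffic to the transaction, and does this visitor fall into the sample? Below is a minimal Python sketch under stated assumptions: the page path, `TRIGGER_PAGES`, `SAMPLE_RATE` and `should_intercept` are all hypothetical, and this post does not describe how the real intercept software selects visitors.

```python
import random

# Hypothetical: the pages that drive the most traffic to the transaction
# being measured. The real list is not given in the post.
TRIGGER_PAGES = {"/hypothetical/jsa-landing-page"}

# Assumed sampling rate: invite roughly 1 in 20 eligible visitors.
SAMPLE_RATE = 0.05


def should_intercept(page_path: str, rng: random.Random,
                     sample_rate: float = SAMPLE_RATE) -> bool:
    """Return True if this page view should show the study invitation."""
    if page_path not in TRIGGER_PAGES:
        # Only intercept on pages that lead to the task, so that
        # participants arrive with 'true intent' to complete it.
        return False
    # rng.random() is uniform on [0.0, 1.0), so this is True with
    # probability sample_rate.
    return rng.random() < sample_rate
```

A production version would presumably also avoid inviting the same visitor twice (for example with a cookie); that is left out of the sketch.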

The study is similar to a traditional online survey, but it has a twist. After the user opts to take part, they are asked to download a small piece of software onto their machine. The download is simple (just a few straightforward ‘continue’ clicks) and it is this element that allows the Insight Manager to track actual user behaviour.

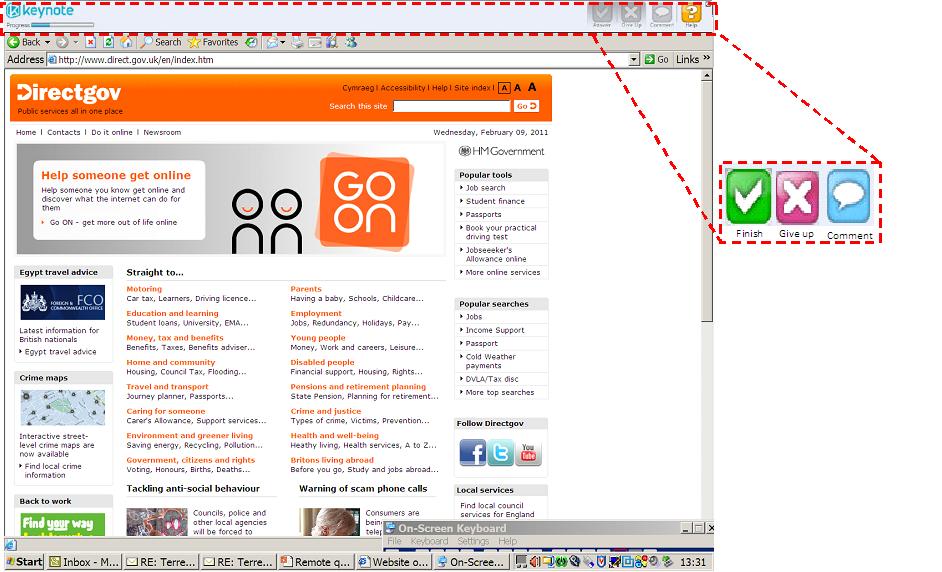

Once the download finishes, a few standard survey questions measure users’ pre-task expectations. The user is then asked to try to complete the task they originally came to Directgov to do, and is encouraged to behave as they normally would. The only difference to the user experience is a toolbar placed at the top of the computer screen (see the illustration below). The toolbar reminds users of their task and gives them the option to hit ‘finish’, ‘give up’ or ‘comment’ at any stage of their journey. The user is then left to navigate the site and try to complete the task until they tell the software that they have finished. When the user hits ‘finish’ or ‘give up’ in the toolbar, the remainder of the survey appears, measuring their post-task experience.
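
The toolbar events lend themselves to simple arithmetic for two of the headline measures, task completion rate and time taken. The sketch below assumes a hypothetical per-session record (`Session`, holding start and end timestamps and the button the user pressed); the real study’s data format is not described in this post. Note that ‘finish’ is self-reported, so this completion rate counts users who said they had finished.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    """One participant's task attempt (hypothetical record format)."""
    started_at: float  # seconds; when the task began, after pre-task questions
    ended_at: float    # seconds; when the user pressed 'finish' or 'give up'
    outcome: str       # "finish" or "give up"


def summarise(sessions: list[Session]) -> dict:
    """Compute completion rate and median time taken for finished sessions."""
    finished = [s for s in sessions if s.outcome == "finish"]
    completion_rate = len(finished) / len(sessions) if sessions else 0.0
    # Time taken is only measured for users who reported finishing.
    times = [s.ended_at - s.started_at for s in finished]
    return {
        "completion_rate": completion_rate,
        "median_time_to_finish": median(times) if times else None,
    }
```

For example, three ‘finish’ sessions lasting 300, 240 and 120 seconds plus one ‘give up’ give a completion rate of 0.75 and a median time of 240 seconds. The sketch uses the median rather than the mean because a few very long sessions (a user leaving the task open while doing something else) would otherwise dominate; that is a choice made for the sketch, not something the post specifies.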

Pilot study using the Jobseeker’s Allowance service

To test the methodology, the GDS Insight team ran a pilot study in June 2011 with support from colleagues at Jobcentre Plus, as the study focused on the user task of trying to apply for Jobseeker’s Allowance online through Directgov. This is a complex transaction, which allowed us to thoroughly road-test the methodology. The survey ran for a week and collected 266 responses.

Lessons learnt

The pilot study returned a wealth of data, which we in the Customer Insight team then analysed. We learnt a lot during the implementation and analysis phases, particularly about the performance of the Jobseeker’s Allowance service. From a methodological point of view, two key findings stand out that will affect how the methodology is rolled out across government in the future:

Services need to be designed with analytics in mind (thanks to Ash for the phrase!)

Because of the way the Jobseeker’s Allowance service works, pages are generated dynamically. There is essentially no concept of ‘a page’; instead, questions are presented to users based on their previous inputs and answers. This is fine from a user perspective, but it causes problems when it comes to gathering and interpreting behavioural data.

As a result, there is no ‘hook’ in the data to orient yourself by. You cannot tell where people are in the journey or at what stage they drop out, because all of the URLs are dynamically generated. Looking for patterns in behaviour is therefore meaningless, because every user journey looks completely unique in the data.

This is not something we had anticipated, but it is a key finding for digital government: services need to be built with analytics in mind so that the right data can be extracted once a service is live. At the very least, services need unique page or section titles that the tracking software can pick up. Ideally, each page should contain a unique identifier so that analysis can be done at the deepest level.
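
To see why a stable identifier matters, consider the simplest drop-out analysis: for every journey that was abandoned, count the last page the user saw. The Python sketch below assumes each journey is a list of page identifiers (for example the unique page titles or IDs recommended above); `drop_out_points` and the identifiers mentioned after it are hypothetical.

```python
from collections import Counter


def drop_out_points(journeys: list[list[str]], completed: list[bool]) -> Counter:
    """Count the last page seen in each abandoned journey.

    journeys[i] is the ordered list of page identifiers visited in
    journey i; completed[i] says whether that journey was completed.
    """
    counts = Counter()
    for pages, done in zip(journeys, completed):
        if not done and pages:
            # The final page of an abandoned journey is its drop-out point.
            counts[pages[-1]] += 1
    return counts
```

With identifiers such as `jsa:personal-details`, abandoned journeys pile up on the same few keys, and `most_common()` on the returned `Counter` ranks the worst drop-out points. Fed dynamically generated URLs instead, each key tends to occur only once and the counts show no pattern, which is the problem the JSA pilot ran into.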

The download attracts confident users

One of the survey questions asked users to rate how confident they felt when using the internet. The vast majority rated themselves as highly or quite confident. This is perhaps not surprising given the methodology and the fact that participants had to download a piece of software in order to take part. However, it does raise questions about how representative the methodology is for certain target audiences, and to what extent the data can be applied to the entire audience group. What we can say is that the data represents a ‘best case’ scenario: measures such as completion rates and time taken are based on the efforts of a service’s most competent and confident users.

In the future we want to explore how (if at all) the data changes when the study’s sample is more representative, including people who are less digitally savvy. One option being considered is to run a benchmarking study in a Jobcentre. This would involve using digitally assisted PCs, where the software is already installed before the user sits down at the machine to begin their task.

What now?

We plan to keep testing and refining the methodology. The ultimate goal is to package up the study so that it can be deployed across all online government services. The next step is to run the study on a service that is still in development. This will allow us to assess how well the methodology works on a prototype site with respondents recruited from an online panel.

We would also like to run a Summative Test that collects field input data (we did not collect this type of data in the JSA pilot). Gathering this data would allow us to measure and improve form accuracy; inaccurate forms cost government hundreds of thousands of pounds in avoidable contact. This is a controversial topic because of concerns about data storage, lost personal data and civil servants’ generally risk-averse attitude to data collection. While these concerns are well founded, digital government needs to weigh the risks of collecting a research sample’s worth of personal data against the financial benefits of reducing form errors. To start with, we intend to run the test on an uncontroversial transaction that does not require particularly personal field inputs. We hope this will give us a case study demonstrating the benefits of collecting this type of information.

The Customer Insight team’s view to date is that the Summative Test produces a wealth of rich data that isn’t available anywhere else and that can be used to directly influence the completion rates of digital services. We are keen to test the study further and evaluate its full potential. More to follow…

8 comments

Comment by This week at GDS | Government Digital Service posted on

[...] summative testing on Inside Gov this week to give us an idea how it’s performing with users. We’ll share the [...]

Comment by Feedback isn’t just for Cobain and Hendrix – what we heard from the Inside government beta | Government Digital Service posted on

[...] the GDS’ summative test methodology, we put Inside government to the scrutiny of a panel of 383 users. The respondents were a mix of [...]

Comment by Quantitative testing Betagov content and layout | Government Digital Service posted on

[...] up conventional face-to-face usability testing that was conducted in December 2011, and used GDS’s Summative Test methodology, which lets us reach a large, representative sample of 1,800 online users cheaply and [...]

Comment by Helen Hardy posted on

Think it's great that you're planning to benchmark this - the trouble with any kind of pop-up or similar is that it can be self-selecting - people only respond if they are confident. Also, the danger can be they only respond if they've had a particularly negative or positive experience but I like the approach of going through the experience with them (if only you can get those page details to work...)

Comment by Service Delivery in Government posted on

[...] entry entitled, Piloting new ways of measuring success on the UK Cabinet Government Digital Service blog discusses how the Customer Insight Team have [...]

Comment by Measuring the success of digital government services | Service Delivery in Government posted on

[...] recent post on the UK Cabinet Office Government Digital Service Team blog, Piloting new ways of measuring digital success discusses their work developing a research methodology to measure the success of online services. [...]

Comment by PJ posted on

"In essence, services need to be built with analytics in mind so that the right data can be extracted once a service is live." -- yes, I believe this is key. You can also use Crazy Egg and ClickTale to get more insight from your usability studies...

These are standard in conversion rate optimization tests done by ecommerce sites. It's good you've created a bespoke package here though, interesting...

Comment by Tony Gilbert posted on

A very interesting approach. Do you feel that the method may have skewed the participation towards confident users? Perhaps less confident users would have been put off by having to download and install a piece of software on their computer?

We currently use random (1:20) popup surveys to sample customer satisfaction and task completion, with pretty good success rates. We are considering linking these to analytics and to session recordings so we can look at the (anonymised) user experience of participants in more detail. This approach requires no additional client software.

Let me know if you're interested in more information.